Hand tracking in HoloLens 2 is awesome, but detecting gestures is not. Currently, HoloLens supports the following built-in hand gestures:

If no controllers are present then the default controllers are the user's hands.

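```csharp
using Microsoft.MixedReality.Toolkit.Input;     // HandJointUtils
using Microsoft.MixedReality.Toolkit.Utilities; // MixedRealityPose, TrackedHandJoint, Handedness

MixedRealityPose pose;

// Returns false when the right hand (or this joint) is not currently tracked
if (HandJointUtils.TryGetJointPose(TrackedHandJoint.IndexTip, Handedness.Right, out pose))
{
    // Use the pose object to access the position and orientation of IndexTip
}
```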
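As an illustration of what the joint pose can be used for, here is a minimal sketch that compares the positions of the right index tip and thumb tip every frame and logs when they come close together. The component name `RightHandPinchProbe` and the 2 cm threshold are assumptions made for this example, not MRTK values, so treat it as a starting point rather than a robust gesture detector.

```csharp
using Microsoft.MixedReality.Toolkit.Input;
using Microsoft.MixedReality.Toolkit.Utilities;
using UnityEngine;

// Hypothetical component name; not part of MRTK.
public class RightHandPinchProbe : MonoBehaviour
{
    // Assumed fingertip distance threshold in meters; tune it for your application.
    [SerializeField] private float pinchThreshold = 0.02f;

    private void Update()
    {
        // Both lookups return false while the right hand is not tracked.
        if (HandJointUtils.TryGetJointPose(TrackedHandJoint.IndexTip, Handedness.Right, out MixedRealityPose indexTip) &&
            HandJointUtils.TryGetJointPose(TrackedHandJoint.ThumbTip, Handedness.Right, out MixedRealityPose thumbTip))
        {
            float distance = Vector3.Distance(indexTip.Position, thumbTip.Position);

            if (distance < pinchThreshold)
            {
                Debug.Log($"Right-hand pinch candidate, fingertip distance: {distance:F3} m");
            }
        }
    }
}
```

Attaching this component to any active GameObject in an MRTK scene should be enough to try it out. A single distance check like this will fire on every frame the fingers stay together, so a real detector would likely track state changes as well.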

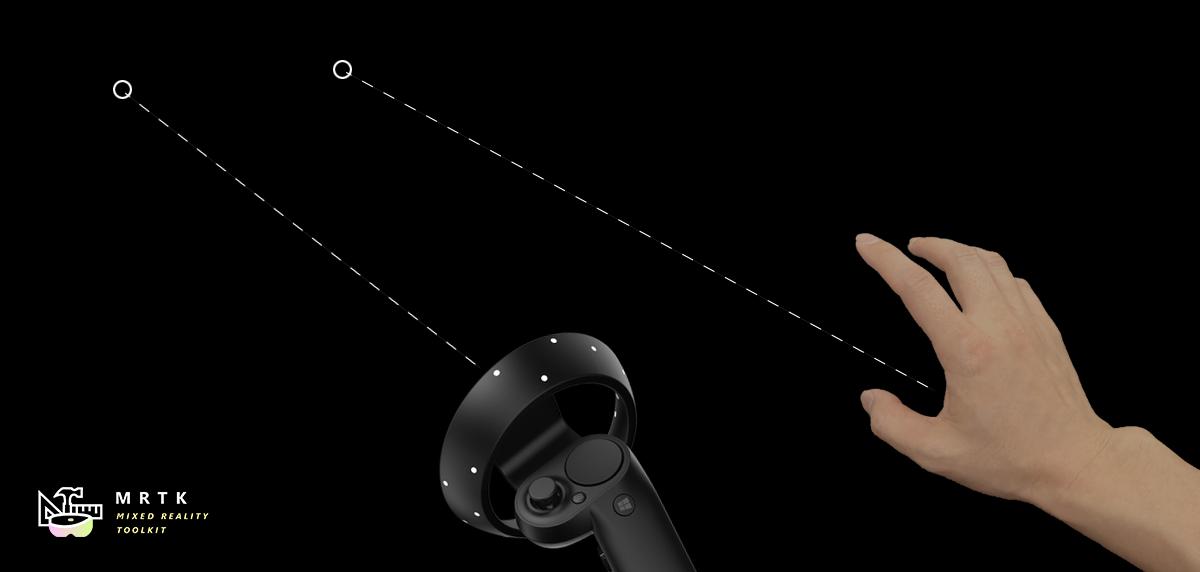

HoloLens uses *Pointers* to focus on different GameObjects.

```csharp
using Microsoft.MixedReality.Toolkit;       // CoreServices
using Microsoft.MixedReality.Toolkit.Input; // IMixedRealityPointerHandler, MixedRealityPointerEventData

public class FiringProjectiles : Singleton<FiringProjectiles>, IMixedRealityPointerHandler
{
    void Awake()
    {
        // Register globally so pointer events arrive even when this object is not in focus
        CoreServices.InputSystem?.RegisterHandler<IMixedRealityPointerHandler>(this);
    }

    void IMixedRealityPointerHandler.OnPointerUp(MixedRealityPointerEventData eventData)
    {
        // Requirement for implementing the interface
    }

    void IMixedRealityPointerHandler.OnPointerDown(MixedRealityPointerEventData eventData)
    {
        // Requirement for implementing the interface
    }

    void IMixedRealityPointerHandler.OnPointerDragged(MixedRealityPointerEventData eventData)
    {
        // Requirement for implementing the interface
    }

    // Detecting the air tap gesture
    void IMixedRealityPointerHandler.OnPointerClicked(MixedRealityPointerEventData eventData)
    {
        // C# string literals need double quotes; single quotes denote a char
        if (eventData.InputSource.SourceName == "Right Hand" ||
            eventData.InputSource.SourceName == "Mixed Reality Controller Right")
        {
            // Do something when the user does an air tap using their right hand only
        }
    }
}
```

We can access the controller that triggered a specific event through the MixedRealityPointerEventData object, as shown above in the OnPointerClicked function. Note that the Air Tap gesture is a pointer gesture by default, which is why this mapping is possible. Unity automatically maps the detected controller's controls to the built-in gestures (unless specified otherwise).
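If the same source-name comparison is needed in more than one handler, it can be pulled into a small helper so the string literals live in one place. The sketch below is only one way to do that: the class `InputSourceNames` and the method `IsRightSource` are hypothetical names, and the two strings are simply the ones compared in OnPointerClicked above.

```csharp
// Hypothetical helper; not part of MRTK or Unity.
public static class InputSourceNames
{
    // Source names taken from the OnPointerClicked check above.
    public const string RightHand = "Right Hand";
    public const string RightController = "Mixed Reality Controller Right";

    // Returns true when the given source name matches either right-hand source.
    // A null or unknown name simply yields false.
    public static bool IsRightSource(string sourceName)
    {
        return sourceName == RightHand || sourceName == RightController;
    }
}
```

Inside OnPointerClicked, the condition would then read `InputSourceNames.IsRightSource(eventData.InputSource.SourceName)`.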

We can detect the controller type using the SourceName property of the InputSource object, as shown above. We check the source name twice for the following reason: